What's In A Test

Testing in layers

I posted an article recently about how I changed my end to end automation test methods as the underlying product changed. I made reference to unit and component tests in that article. In this article I describe some of that other testing and how it relates to the feature architecture.

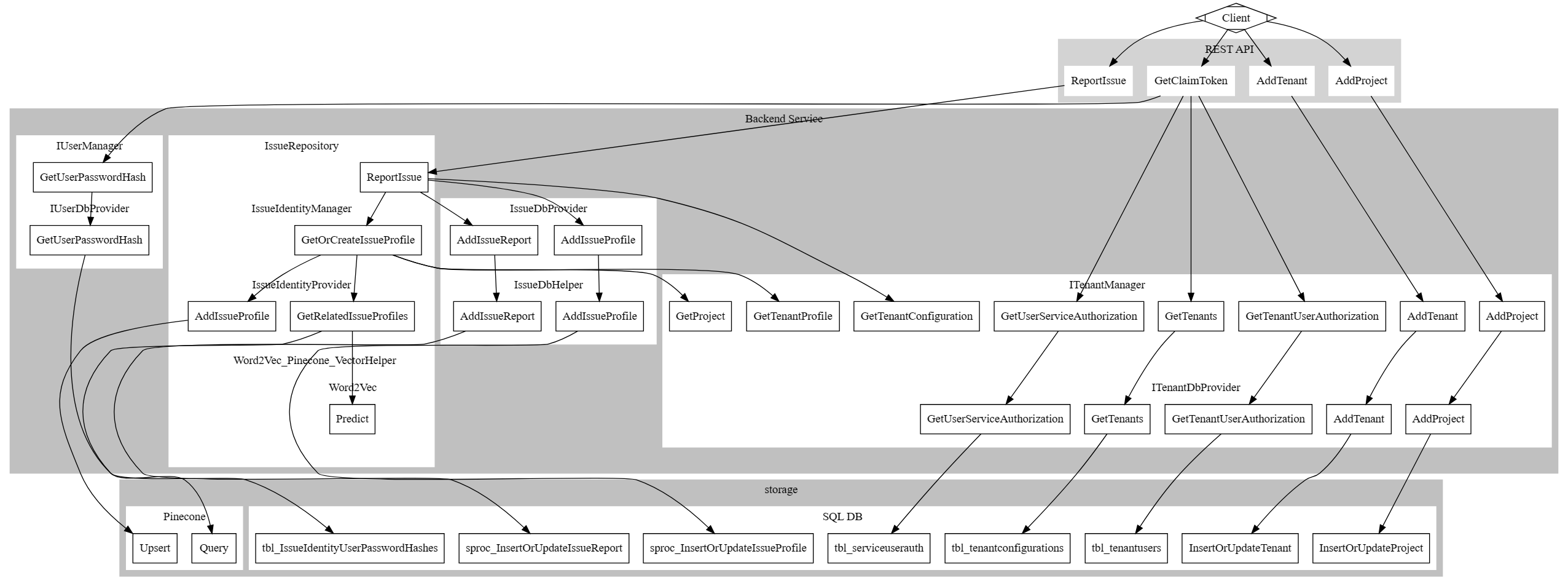

End to End Test Diagram

I want to start with the end to end testing of a feature called “Report Issue.” This feature is exposed via a REST API and allows a caller to submit an issue report as a string. The system either returns the identity of an existing issue, if the same issue was reported before, or creates a new issue and returns the new identity.

The GET_ReportIssue() test procedure

The test method is relatively simple:

    [TestMethod]
    public void GET_ReportIssue()
    {
        string testMessage = "somethingnew";
        string requestUri = IssueIdentityApiTestsHelpers.BuildReportIssueGetRequestUri(testMessage, UriBase, _sharedTenantId, _sharedProjectId);
        WebRequest webRequest = IssueIdentityApiTestsHelpers.CreateGetRequest(requestUri, _adminToken);

        // Dispose the response as well as the reader so the connection is released.
        using (WebResponse webResponse = webRequest.GetResponse())
        using (var responseReader = new StreamReader(webResponse.GetResponseStream()))
        {
            string response = responseReader.ReadToEnd();
            Console.WriteLine(response);

            IssueProfile issueProfileResponse = JsonSerializer.Deserialize<IssueProfile>(response);
            Assert.AreEqual(testMessage, issueProfileResponse.exampleMessage, "Fail if the example message of the returned issue is not what was sent.");
            Assert.IsTrue(issueProfileResponse.isNew, "Fail if the issue was not reported as new.");
        }
    }

The method constructs a URI to the REST API, makes a request, and checks the return value. There are variables in the method which indicate some work that happens during test setup:

- _sharedTenantId: issue reports live in projects, which live in tenants, so a tenant is created during test setup

- _sharedProjectId: same as above

- _adminToken: a JWT bearer token which holds claims that both authenticate and authorize the user, fetched during test setup

ReportIssue() Product Behavior

Behind the REST API, the product code does the following:

- check tenant information to determine which role in the user claims aligns to the current tenant

- fetch word embeddings for the issue reported from a Word2Vec model

- query a vector database to see if any previously reported issues are within similarity threshold

- if nothing matches, store the embeddings in the vector database as a new issue

- if new issue, store the issue profile in the issue database

- store the issue report in the issue database
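The steps above can be sketched in memory as follows. This is a minimal sketch, not the product code: the class `ReportIssueSketch`, the injected `embed` function (standing in for the Word2Vec lookup), the in-memory lists (standing in for the vector database and the issue database), and the choice of cosine similarity are all assumptions made for illustration. The tenant role check is left out.

```csharp
using System;
using System.Collections.Generic;

// Illustrative only: an in-memory stand-in for the ReportIssue flow.
public class ReportIssueSketch
{
    private readonly Func<string, float[]> _embed;   // assumed stand-in for the Word2Vec lookup
    private readonly double _threshold;              // assumed similarity cutoff

    // Stand-in for the vector database.
    private readonly List<(string Id, float[] Vector)> _vectors = new List<(string Id, float[] Vector)>();

    // Stand-in for the issue database: every report, with the issue id it resolved to.
    public List<(string IssueId, string Message)> Reports { get; } = new List<(string IssueId, string Message)>();

    public ReportIssueSketch(Func<string, float[]> embed, double threshold)
    {
        _embed = embed;
        _threshold = threshold;
    }

    public (string IssueId, bool IsNew) ReportIssue(string message)
    {
        float[] vector = _embed(message);
        string issueId = null;

        // Take the first previously reported issue within the similarity threshold.
        foreach (var known in _vectors)
        {
            if (Cosine(vector, known.Vector) >= _threshold)
            {
                issueId = known.Id;
                break;
            }
        }

        bool isNew = issueId == null;
        if (isNew)
        {
            // Nothing matched: store the embedding as a new issue.
            issueId = Guid.NewGuid().ToString();
            _vectors.Add((issueId, vector));
        }

        // The report itself is stored whether or not the issue is new.
        Reports.Add((issueId, message));
        return (issueId, isNew);
    }

    // Cosine similarity; assumes both vectors have the same length.
    private static double Cosine(float[] a, float[] b)
    {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }
}
```

Even in this reduced form, one call touches three collaborators (embedding lookup, vector store, report store), which is why the end to end test exercises so much with a single request.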

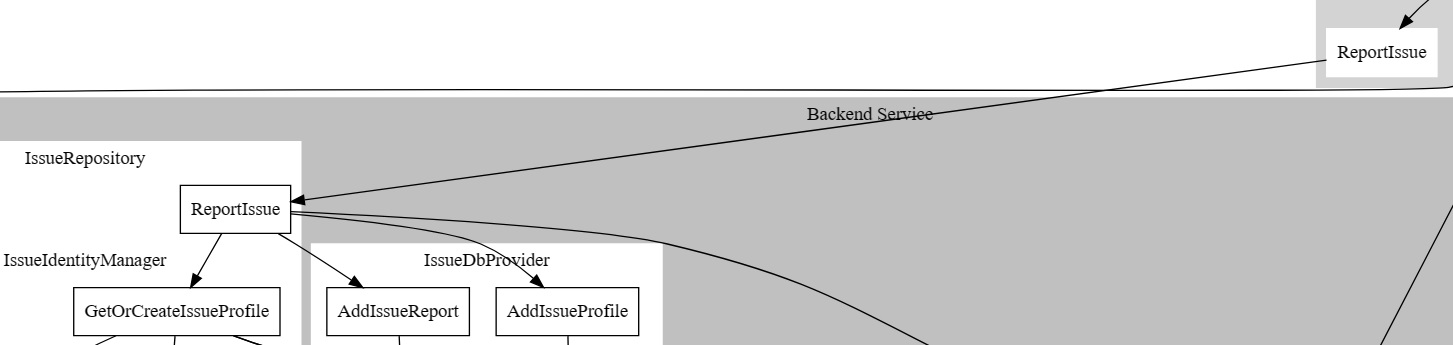

The product design supports an extensible, pluggable set of providers and interface implementations to allow execution on different storage systems, embedding solutions, or vector databases. A diagram of the current implementation, showing all the points of interaction during the single “webRequest.GetResponse()” call above is shown below:

The connection from Client to REST API on the “ReportIssue” bubble corresponds to the “webRequest.GetResponse()” call in the code excerpt above. The other connections from the client to REST API all correspond to test setup. I left test cleanup out of the diagram, as it was getting too cluttered.

Reflections on the GET_ReportIssue end to end test

I knew there was a lot going on behind the scenes when this test method executes, but I was still taken by surprise when I tried to draw the picture. It was richer and more complex than I had expected, and even so the diagram is simplified. For example, the stored procedures that write data connect to the same tables that later read operations use, which would probably be useful to show because it demonstrates the integration relationship between operations. Those earlier writes to the tenant and project tables affect the later call to ReportIssue, which fetches information about the tenant.

Very early on with this test I might have had a few times where the main functional behavior, reporting an issue, failed to work correctly. There were not many of those instances, and the test stopped failing there very quickly. There are more direct tests at the unit level which check that functionality piece by piece.

The bugs that came up during the end to end testing were mostly about integrating components together or doing multiple operations over time to complete longer sequences. SQL connection leaks show more easily, as the end to end tests leave the system under test (SUT) running across iterations. Mistakes made creating a user token or getting the right claims came up. So did underlying SQL queries whose WHERE clauses were not filtering precisely enough. All of these things slipped past the lower level tests, some because the case slipped my mind when I was writing the check, and some because the problem does not exist until all the components come together.

End to End Testing Interactively

If I can, I don’t start my end-to-end tests with automated scripts. I like to interact more directly. When I write code, I commit decisions into the script, and sometimes I want to change my mind more quickly than that.

One way I like to do that is with a command line programming language like PowerShell or Python. I prefer PowerShell, but Python is pretty spectacular. I do not really like either for production code, but for on the fly, ad hoc testing of things like product APIs, both are fantastic.

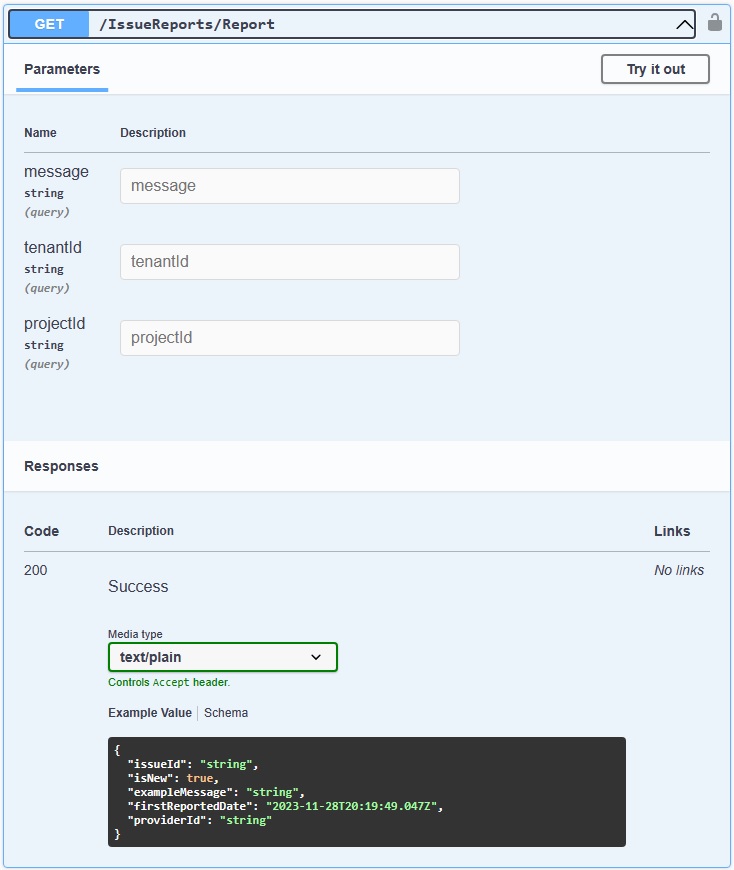

In this particular example, I tested using Swagger, which allows ASP.NET

REST API services to express their API as web-based user interface. Here is an example

of the Swagger interface for ReportIssue:

The backend behavior is the same for interacting with Swagger as it is for my test script. The difference is a lot more clicking, and I might deviate my behavior to investigate something. I typically have Swagger up on one screen and SQL Server Management Studio up on the other. There is a lot of copy and paste of data back and forth, as many of the item ids are GUIDs generated on the fly by the service. I also use SQL queries to clean up data left behind.

I do a lot of the same when debugging with the scripts. Sometimes the script hits a failure in the product or in itself, and I use the database to check backend state. Even with automation support, a lot of the time I am testing interactively.

Unit Tests for ReportIssue

Every single rectangle under “Backend Service” in the diagram above has a corresponding test or set of tests targeting it. The unit tests helped me track when a fundamental change in the code breaks something fundamental enforced in some other test. Schema changes and changes to the meanings of different flags break a lot here. I also found that when I wanted to plumb some piece of data through a stack of layers (e.g. I did not always track the “ProjectId” or “TenantId” for data isolation), many of the tests would fail while I made those changes. Sometimes the test was the problem - but the problem was in the product code often enough that updating the tests was worth the cost.

IssueRepository - top level object

The following test method exercises the ReportIssue method in the IssueRepository class.

This is a higher level class that combines two interfaces: an IIssueDbProvider for storing issues and reports, and an IIssueIdentityProvider for determining whether an issue is new or previously reported. A mock is provided for both interfaces, with overrides specified in the test method for each of the methods called during ReportIssue. Each mock is designed so that any method not overridden will throw a NotImplementedException if called.

    [TestMethod]
    public void ReportIssue_newissue()
    {
        IssueReport passedInIssueReport = null;
        IssueProfile passedInIssueProfile = null;

        // Database provider mock: capture what ReportIssue tries to store.
        MockIssueDbProvider dbProvider = new MockIssueDbProvider();
        dbProvider.overrideConfigure = (s) => { };
        dbProvider.overrideAddIssueReportTenantConfigProjId = (i, t, s2) => { passedInIssueReport = i; };
        dbProvider.overrideAddIssueProfileTenantConfigProjId = (i, t, s2) => { passedInIssueProfile = i; };

        // Identity provider mock: report no related profiles, so the issue is new.
        MockIssueIdProvider issueIdentityProvider = new MockIssueIdProvider();
        issueIdentityProvider.overrideAddIssueProfile = (i, t, p) => { };
        issueIdentityProvider.overrideGetRelatedProfilesTenantConfigProjId = (i, c, t, p) => { return new RelatedIssueProfile[] { }; };
        issueIdentityProvider.overrideCanHandleIssue = (i) => { return true; };
        issueIdentityProvider.overrideConfigure = (s) => { };

        IssueRepository issueRepository = new IssueRepository(dbProvider, new IssueIdentityManager(new IIssueIdentityProvider[] { issueIdentityProvider }, null));
        DateTime testdate = new DateTime(2000, 01, 02);
        EmptyTenantManager emptyTenantManager = new EmptyTenantManager();

        IssueProfile issueProfile = issueRepository.ReportIssue(new IssueReport() { IssueMessage = "testmessage", IssueDate = testdate }, emptyTenantManager.DefaultTenant(), string.Empty);

        Assert.IsTrue(issueProfile.IsNew, "Fail if the issue is not new.");
        Assert.IsNotNull(passedInIssueReport, "Fail if the passed in issue report is null.");
        Assert.IsNotNull(passedInIssueProfile, "Fail if the passed in issue profile is null.");
        Assert.AreEqual(testdate.ToString(), issueProfile.FirstReportedDate.ToString(), "Fail if the first reported date is not as expected.");
        Assert.AreEqual("testmessage", passedInIssueProfile.ExampleMessage, "Fail if the example message is not expected value.");

        Guid temp;
        Assert.IsTrue(Guid.TryParse(passedInIssueReport.IssueId, out temp), "Fail if issue id is not a guid.");
        Assert.AreEqual("testmessage", passedInIssueReport.IssueMessage, "Fail if did not get expected issue message on passedInIssueReport.");
    }
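The mock style used in this test, where each interface method forwards to an optional override delegate and throws if no override was assigned, might look roughly like the sketch below. The interface `IIssueStore` and the class `MockIssueStore` are hypothetical names invented for this illustration; they are not the real IIssueDbProvider or MockIssueDbProvider from the repository.

```csharp
using System;

// Hypothetical interface, standing in for something like IIssueDbProvider.
public interface IIssueStore
{
    void AddIssue(string issueId, string message);
    string GetMessage(string issueId);
}

// Hand-rolled mock: each method forwards to an override delegate.
// A method with no override throws, so an unexpected call fails the test loudly.
public class MockIssueStore : IIssueStore
{
    public Action<string, string> overrideAddIssue;
    public Func<string, string> overrideGetMessage;

    public void AddIssue(string issueId, string message)
    {
        if (overrideAddIssue == null)
        {
            throw new NotImplementedException(nameof(AddIssue));
        }
        overrideAddIssue(issueId, message);
    }

    public string GetMessage(string issueId)
    {
        if (overrideGetMessage == null)
        {
            throw new NotImplementedException(nameof(GetMessage));
        }
        return overrideGetMessage(issueId);
    }
}
```

A test assigns only the overrides it expects the code under test to use. If the product code starts calling a method the test did not anticipate, the NotImplementedException points straight at the new dependency.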

The point of the IssueRepository is to provide the key business logic for the ReportIssue behavior, independent of storage technology or service platform. Implementations of IIssueIdentityProvider and IIssueDbProvider might enable execution on SQL Server, MySQL, Pinecone, Word2Vec, various Azure storage solutions, AWS services, or a variety of other technologies.

I had this same unit test for quite a long time. At one point I stood the service up on Azure. The entire IssueRepository testing code stayed the same when I shifted first from SQL to Azure, and then back to SQL. The same was true for all the other unit tests that sat above the technology specific providers. I knew that even as I changed or shifted supporting platforms I could still check my business logic.

IIssueDbProvider - GetIssueProfile()

I am going to skip the IssueIdentity layer between IssueRepository and IIssueDbProvider because I used the same testing ideas there as in IssueRepository. A more interesting thing happens at IIssueDbProvider, where the product code gets closer to the database layer. I am also going to shift to GetIssueProfile() because, as a read operation, it has to return data, which the write operations (like AddIssueProfile) do not. That is interesting to look at.

It is almost impossible to separate all business logic from data storage, and it is difficult to test data tier business logic in unit tests running during build processes that do not have access to the storage platform. I like to do as little business logic in the database as I can, except in cases where the database is better at the heavy lifting.

To support this, the IIssueDbProvider implementation for SQL Server takes a helper object. All the SQL connections and queries are handled by the helper. The IIssueDbProvider implementation does all the conversion of query results to the classes and data types used by the upper level tiers. This allows all business logic except for IO to be tested in memory, in unit tests.

To do this, the mock below returns a DbDataReader object. This keeps the SQL IIssueDbProvider from having to deal with sql connections itself - the data can be constructed in memory if needed.

    [TestMethod]
    public void GetIssueProfile()
    {
        DateTime nowTime = DateTime.Now;

        // The mock helper builds its result set in memory instead of querying SQL.
        MockSqlIOHelper mockSqlIOHelper = new MockSqlIOHelper();
        mockSqlIOHelper.overrideGetIssueProfile = (s, t, p) =>
        {
            DataTable dt = new DataTable();
            dt.Columns.Add("Id", typeof(string), string.Empty);
            dt.Columns.Add("ExampleMessage", typeof(string), string.Empty);
            dt.Columns.Add("FirstReportedDate", typeof(DateTime), string.Empty);
            dt.Rows.Add("testissue", "testmessage", nowTime);
            return dt.CreateDataReader();
        };

        SqlIssueDbProvider sqlDbProvider = new SqlIssueDbProvider(mockSqlIOHelper);
        IssueProfile issueProfile = sqlDbProvider.GetIssueProfile("testissue", new TenantConfiguration(), string.Empty);

        Assert.AreEqual("testissue", issueProfile.IssueId, "Fail if issue profile id does not match expected.");
        Assert.AreEqual("testmessage", issueProfile.ExampleMessage, "Fail if example message does not match expected.");
        Assert.AreEqual(nowTime.ToString(), issueProfile.FirstReportedDate.ToString(), "Fail if date does not match expected value.");
    }

The advantage here is we can isolate platform and technology specific business logic from that which is independent of technology or platform.
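To make the split concrete, the conversion work that stays on the provider side of the helper could look something like the sketch below. It is only an illustration of the idea: `ProfileSketch` and `ProfileReaderMapper` are hypothetical names, not the real IssueProfile or SqlIssueDbProvider, and the column names are taken from the test above. Trimming the id mirrors what the IO provider test later in this article does and is otherwise an assumption.

```csharp
using System;
using System.Data.Common;

// Hypothetical, reduced stand-in for IssueProfile.
public class ProfileSketch
{
    public string IssueId { get; set; }
    public string ExampleMessage { get; set; }
    public DateTime FirstReportedDate { get; set; }
}

// Hypothetical mapper: pure conversion logic with no SQL connection in sight.
public static class ProfileReaderMapper
{
    // Reads the first row into a profile; returns null when there are no rows.
    public static ProfileSketch ReadFirst(DbDataReader reader)
    {
        using (reader)
        {
            if (!reader.Read())
            {
                return null;
            }

            return new ProfileSketch
            {
                IssueId = reader.GetString(reader.GetOrdinal("Id")).Trim(),
                ExampleMessage = reader.GetString(reader.GetOrdinal("ExampleMessage")),
                FirstReportedDate = reader.GetDateTime(reader.GetOrdinal("FirstReportedDate"))
            };
        }
    }
}
```

Because the method only needs a DbDataReader, a unit test can feed it a reader created from an in-memory DataTable, while the real helper feeds it a reader from a live query.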

There is a challenge to this approach. Note that the override on the mock is creating a data table schema. When you write tests this way, it is very easy for the data table schema to get out of line with the real product schema. That misalignment may or may not affect test behavior. One way to avoid this, which I have not implemented here, is to create a method on the product which creates a DataTable in the expected schema format.
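A minimal sketch of that idea follows. The class `IssueProfileSchema` and its method name are hypothetical, and the columns are simply the three used in the test above rather than the full product schema.

```csharp
using System;
using System.Data;

// Hypothetical product-side helper: the one place that defines the
// column layout expected for issue profile query results.
public static class IssueProfileSchema
{
    public static DataTable CreateTable()
    {
        DataTable table = new DataTable("IssueProfile");
        table.Columns.Add("Id", typeof(string));
        table.Columns.Add("ExampleMessage", typeof(string));
        table.Columns.Add("FirstReportedDate", typeof(DateTime));
        return table;
    }
}

// How a mock override might use it instead of declaring columns by hand.
public static class SchemaUsageExample
{
    public static DataTableReader BuildSingleRowReader(string id, string message, DateTime firstReported)
    {
        DataTable table = IssueProfileSchema.CreateTable();
        table.Rows.Add(id, message, firstReported);
        return table.CreateDataReader();
    }
}
```

This keeps every test on one shared definition, so a column rename breaks in one place. It does not prove the definition matches the real database; only a test that talks to the database can check that.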

No longer doing Unit Tests

I prefer maintaining a strict definition for unit tests. Avoid as much as possible cross component integration. Test as little as possible in each check. Do not test anything which cannot run in memory exclusively during something like a build process - in other words, no IO to file systems, the network, a database, or any external process.

This means I need some non-unit tests. The first kind are those which test the IO interaction. I try to reduce the logic in the code under test to as little as possible, only enough to send or receive IO from some source. Beyond that, I write smaller integration tests that tie multiple units or components together, but stop far short of a complete end to end system. I skipped that second kind for this project, so I am finishing off my examples with an IO provider.

SqlDbIOHelper - GetIssueProfile as an IO provider test

Testing SqlDbIOHelper is something that can no longer fit within the strict constraints of a unit test. We are testing SQL queries, so there needs to be a SQL server running with the proper tables, schema, security, roles, and stored procedures configured. I run this test separately from my unit tests. At the moment I run it at home on my own machine. Were this at work, I would have a lab and have procedures to deploy the SQL server and configure it. Right now I do all of that myself prior to execution.

The test below is more rudimentary than I would like. There are many properties on an issue profile, and this one only checks the ID. Lots of bugs to miss here, so I probably should tend to some cleanup.

The key point of this example is the pattern. It uses the interface the same way that IIssueDbProvider does. It expects a DbDataReader object from the call to GetIssueProfile, and from there it pulls the information it needs from the reader.

Notice an important part, and a reason why this is not a unit test. Prior to making the call to the tested method (GetIssueProfile) the procedure calls “AddIssueProfile” to put data in the database. A unit test would not do that. Instead, whatever IO provider fetches the data would be overridden to present whatever the test needed as if the database had been populated. We are testing stateful IO now, and our test has to jump up a layer of complexity.

    [TestMethod]
    public void GetIssueProfile()
    {
        SqlIssueDbIOHelper sqlIssueDbIOHelper = new SqlIssueDbIOHelper(SqlConfig);

        // Stateful setup: write the profile to the real database first.
        sqlIssueDbIOHelper.AddIssueProfile(
            new IssueProfile() { ExampleMessage = "test msg", FirstReportedDate = DateTime.Now, IsNew = true, IssueId = "testissue1" },
            tenantConfiguration,
            projectId);

        string issueId = string.Empty;
        using (DbDataReader reader = sqlIssueDbIOHelper.GetIssueProfile("testissue1", tenantConfiguration, projectId))
        {
            // Only the first row is of interest.
            if (reader.Read())
            {
                issueId = reader["Id"].ToString().Trim();
            }
        }

        Assert.AreEqual("testissue1", issueId, "Fail if we do not get the expected issueid.");
    }

Reference Material

If you want to see the DOT code that draws the diagram, you can follow the link here. DOT is my preferred language for quick graph sketches.

All of the code above is stored in my DupIQ GitHub repository. If it has been a while since this article was published, some of the code will almost certainly have changed, so consider this a snapshot of a moment in time.